OMS order tracking

Gonna be a bit of a long write.

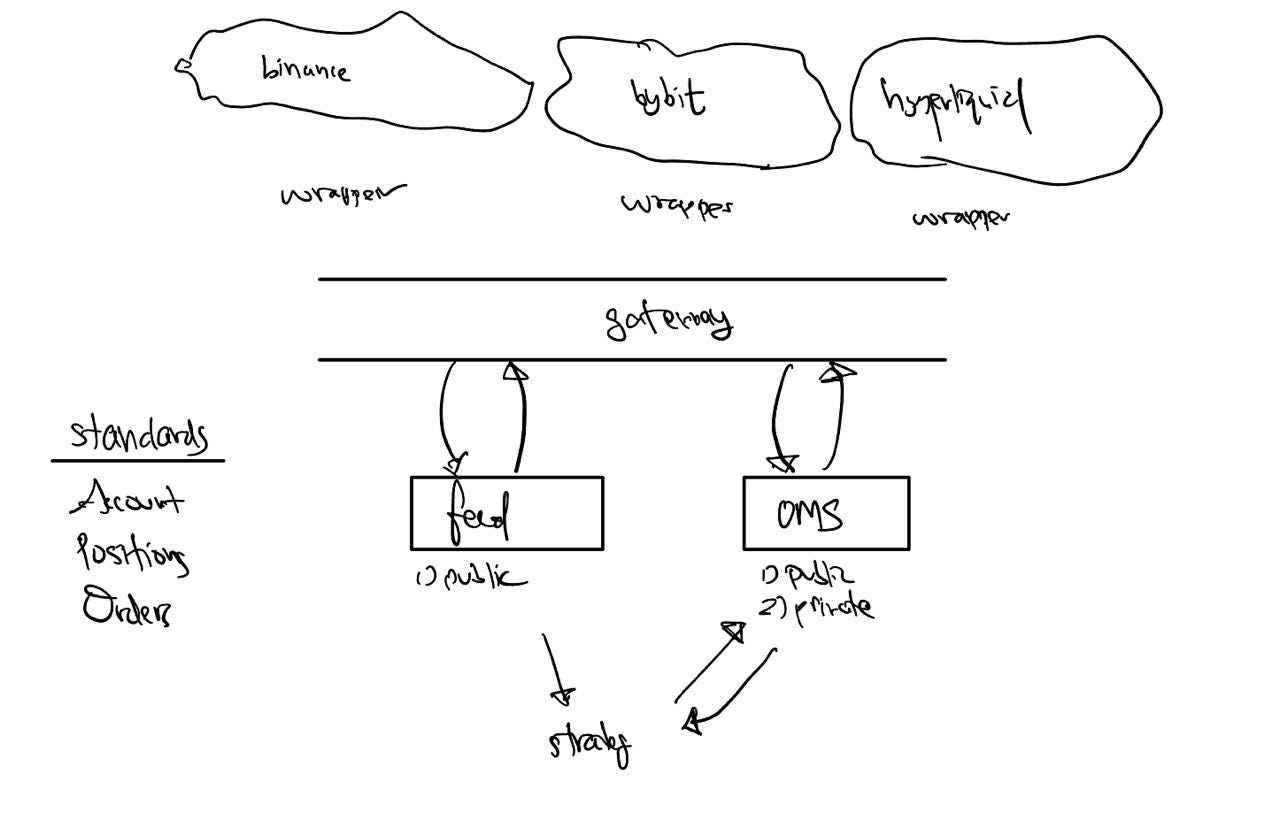

In the last post, we pointed towards the development of the OMS and data feeds as middleware between the gateway connector and your trading strategy.

We will walk through its development and iteration as we go, with three goals:

i) my walkthroughs should give you a good understanding of the design choices;

ii) a thorough ‘How-to-Use’ guide;

iii) failing i), at the very least a walk through some thought processes and tricks that you can use in building your own OMS.

That being said, let’s explore a sub-area of the OMS: order state management. Our OMS shall keep track of a finite number of states. Binance order states are as follows:

This list is, however, non-exhaustive from the perspective of our trading agent. Some missing examples are PENDING (orders created but not yet acknowledged), PENDING_CANCEL and so on…

In many market making scenarios, one might cancel an order that has not yet arrived at, or been acknowledged by, the exchange, so this state is local to our agent. Additionally, knowing whether we have just submitted an unacknowledged order at level X prevents us from making duplicate submissions.
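Here is a minimal sketch of such agent-local state, assuming an agent that tags every order with its own client order ID (cloid) before sending it. The class and method names (`PendingOrderBook`, `submit`, `on_ack`, `cancel`) are hypothetical and not quantpylib's API; the 0x-prefixed 128-bit hex cloid follows the format Hyperliquid accepts for client order IDs.

```python
import secrets
from dataclasses import dataclass
from decimal import Decimal
from typing import Dict, Optional

# states in which an order still occupies its price level
LIVE_STATES = ("PENDING", "NEW", "PARTIAL")


@dataclass
class LocalOrder:
    cloid: str
    ticker: str
    price: Decimal
    amount: Decimal
    status: str = "PENDING"    # local state: sent, not yet acknowledged
    oid: Optional[str] = None  # exchange order id, unknown until the ACK


class PendingOrderBook:
    """Hypothetical agent-side registry of orders keyed by client order id."""

    def __init__(self):
        self.by_cloid: Dict[str, LocalOrder] = {}

    def has_live_order_at(self, ticker, price):
        price = Decimal(str(price))
        return any(
            o.ticker == ticker and o.price == price and o.status in LIVE_STATES
            for o in self.by_cloid.values()
        )

    def submit(self, ticker, price, amount):
        """Record a PENDING order, or return None if one is already live at this level."""
        if self.has_live_order_at(ticker, price):
            return None  # avoid a duplicate submission at the same price level
        cloid = "0x" + secrets.token_hex(16)  # 128-bit hex client order id
        order = LocalOrder(cloid, ticker, Decimal(str(price)), Decimal(str(amount)))
        self.by_cloid[cloid] = order
        # a real agent would now hand the order to the exchange connector
        return order

    def on_ack(self, cloid, oid):
        """Exchange acknowledged the order: attach the oid and promote it to NEW."""
        order = self.by_cloid.get(cloid)
        if order is not None and order.status == "PENDING":
            order.oid = oid
            order.status = "NEW"
        return order

    def cancel(self, cloid):
        """Mark a live order PENDING_CANCEL, even if the exchange has not acknowledged it yet."""
        order = self.by_cloid.get(cloid)
        if order is not None and order.status in LIVE_STATES:
            order.status = "PENDING_CANCEL"
        return order
```

PENDING and PENDING_CANCEL only exist on our side: the exchange never reports them, yet they are exactly what stops the agent from stacking a second order at a level it has already quoted.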

A slight complication is that different exchanges support different states. Here are Hyperliquid's: open, filled, canceled, triggered, rejected, marginCanceled (notice there is no partial fill). A partial fill can be inferred, or guessed; we will get to this later.

Ultimately, what matters more are two criteria: the set of states must be i) complete and ii) adequately granular. The first criterion means that every exchange state is covered. The second means that there are enough states to distinguish between orders in the ways relevant to the class of strategies we intend to run. The simplest set would be OPEN/CLOSE. We will choose something a bit more practical, the following states:

```python
ORDER_STATUS = "ord_status"

ORDER_STATUS_PENDING = "PENDING"
ORDER_STATUS_NEW = "NEW"
ORDER_STATUS_PARTIAL = "PARTIAL"
ORDER_STATUS_FILLED = "FILLED"
ORDER_STATUS_REJECTED = "REJECTED"
ORDER_STATUS_CANCELLED = "CANCELLED"
ORDER_STATUS_EXPIRED = "EXPIRED"
```

So in the Binance world, EXPIRED and EXPIRED_IN_MATCH both map to EXPIRED, and in the Hyperliquid world, canceled and marginCanceled both map to CANCELLED, while open maps to either NEW or PARTIAL.
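One way to express these mappings is a pair of translation tables, sketched below under a few assumptions: the Binance entries beyond the EXPIRED ones are the obvious one-to-one choices, Hyperliquid's `sz` is taken to be the unfilled remainder of `origSz` (as in the raw messages shown later), and `triggered` is left unmapped because the post does not say where it belongs. Unmapped statuses raise a `KeyError`, so a gap in completeness fails loudly instead of silently. The function name `to_internal_status` is hypothetical.

```python
from decimal import Decimal

# hypothetical translation tables from exchange statuses to the internal states
BINANCE_STATUS = {
    "NEW": "NEW",
    "PARTIALLY_FILLED": "PARTIAL",
    "FILLED": "FILLED",
    "CANCELED": "CANCELLED",
    "REJECTED": "REJECTED",
    "EXPIRED": "EXPIRED",
    "EXPIRED_IN_MATCH": "EXPIRED",
}

HYPERLIQUID_STATUS = {
    "filled": "FILLED",
    "canceled": "CANCELLED",
    "marginCanceled": "CANCELLED",
    "rejected": "REJECTED",
    # "open" is handled separately below; "triggered" is deliberately unmapped here
}


def to_internal_status(exc, raw_status, orig_sz=None, remaining_sz=None):
    """Translate an exchange order status into the internal vocabulary."""
    if exc == "binance":
        return BINANCE_STATUS[raw_status]  # KeyError on an unmapped status
    if exc == "hyperliquid":
        if raw_status == "open":
            # Hyperliquid has no partial-fill status: infer it from the sizes
            if orig_sz is not None and remaining_sz is not None:
                if Decimal(remaining_sz) < Decimal(orig_sz):
                    return "PARTIAL"
            return "NEW"
        return HYPERLIQUID_STATUS[raw_status]  # KeyError on an unmapped status
    raise ValueError(f"unsupported exchange: {exc}")
```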

Okay, so that’s it for order states. Now, on to order messaging.

Before we talk about order messaging, let’s talk about the different software pieces. The ones closest to the exchange (and furthest from our strategy) are the wrappers that act as SDKs. These implement the exchange-specific API endpoints, establish connections, and adhere to the exchange’s messaging protocols to retrieve all relevant information.

Since different information is provided by different endpoints, with different schemas, it is important for us to standardize the data formats. This allows our trading strategy to be written in the language of our internal state representations. This part is crucial, as it allows us to trade strategy X on exchange A and exchange B with the same code. This is the abstraction that lets us be ‘unconcerned’ with exchange-specific schemas; in other words, separation of concerns.

Since handling a group of wrappers directly becomes somewhat unwieldy, these wrappers are not exposed to the trading agent. Instead, they are accessed through a gateway object, which does request routing (calling the correct wrapper), defines the exchange API interface (the methods that each exchange should implement) and performs standardization (such as ensuring type correctness).

For instance, you would not want to get a portfolio position as a Decimal on one exchange and a float on another: when computing net asset delta, pos_a + pos_b would raise an unsupported operand TypeError. The gateway’s standard types prevent this.
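The failure is easy to reproduce, and the fix is to coerce everything to one type at the boundary. `to_standard` below is a hypothetical stand-in for the gateway's standardization step, not quantpylib's function.

```python
from decimal import Decimal


def to_standard(value):
    """Hypothetical helper: coerce a numeric value from any wrapper to Decimal."""
    if isinstance(value, Decimal):
        return value
    if isinstance(value, (int, float, str)):
        # convert via str so 0.1 becomes Decimal('0.1'), not its binary expansion
        return Decimal(str(value))
    raise TypeError(f"cannot standardize {type(value).__name__}")


pos_a = Decimal("2.5")  # position as reported by one wrapper
pos_b = -1.25           # position as reported by another wrapper

try:
    pos_a + pos_b  # mixing Decimal and float is not allowed
except TypeError as exc:
    print(f"raw sum failed: {exc}")

net_delta = to_standard(pos_a) + to_standard(pos_b)
print(net_delta)  # 1.25
```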

At this point, a trading strategy could connect directly to the gateway, with a completely exchange-independent implementation, because the gateway handles normalization and routing. In previous posts, we showed how easily a momentum portfolio can be implemented on top of the gateway, regardless of exchange.
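A minimal sketch of the routing-plus-interface idea, with hypothetical names throughout (`ExchangeWrapper`, `MiniGateway`, `get_position`, `net_position`) and stub wrappers returning canned values instead of calling any exchange. The abstract base class enforces the interface, since a wrapper that forgets a method cannot even be instantiated, and the strategy-side function never touches an exchange-specific type.

```python
import asyncio
from abc import ABC, abstractmethod
from decimal import Decimal


class ExchangeWrapper(ABC):
    """Hypothetical interface: methods every exchange wrapper must implement."""

    @abstractmethod
    async def get_position(self, ticker):
        ...


class StubBinance(ExchangeWrapper):
    async def get_position(self, ticker):
        return Decimal("1.5")  # canned value for illustration


class StubHyperliquid(ExchangeWrapper):
    async def get_position(self, ticker):
        return Decimal("-0.5")  # canned value for illustration


class MiniGateway:
    """Routes each call to the wrapper registered under `exc`."""

    def __init__(self, wrappers):
        self.wrappers = wrappers

    async def get_position(self, ticker, exc):
        if exc not in self.wrappers:
            raise KeyError(f"no wrapper registered for {exc!r}")
        return await self.wrappers[exc].get_position(ticker)


async def net_position(gateway, ticker, exchanges):
    # strategy code: the same lines work for any set of registered exchanges
    positions = [await gateway.get_position(ticker, exc=e) for e in exchanges]
    return sum(positions, Decimal("0"))
```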

For the line-by-line implementation, please refer to the quantpylib repo code; I will walk through the important parts. At the wrapper level, we may have something like this:

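```python
import asyncio

async def printer(data):
    print(data)

async def main():
    from quantpylib.wrappers.hyperliquid import Hyperliquid

    # config_keys: dict of exchange credentials, defined elsewhere
    hpl = Hyperliquid(**config_keys['hyperliquid'])
    await hpl.init_client()
    # stream raw, exchange-specific order updates to printer
    await hpl.order_updates_subscribe(handler=printer)
    # keep the event loop alive so updates keep arriving
    await asyncio.sleep(1e9)

if __name__ == "__main__":
    asyncio.run(main())
```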

and we get messages like this:

```
[{'order': {'coin': 'SOL', 'side': 'B', 'limitPx': '100.0', 'sz': '1.0', 'oid': 37009173521, 'timestamp': 1725612541970, 'origSz': '1.0'}, 'status': 'open', 'statusTimestamp': 1725612541970}]

[{'order': {'coin': 'SOL', 'side': 'A', 'limitPx': '200.0', 'sz': '1.0', 'oid': 37009203755, 'timestamp': 1725612561033, 'origSz': '1.0'}, 'status': 'open', 'statusTimestamp': 1725612561033}]
```

This is at the wrapper level (remember, the wrapper acts as a connector and is exchange-specific). At the gateway level, we want the option of a standardized schema, which brings us back to internal state representations.
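As a sketch of what standardizing these messages could involve, the hypothetical function below turns one raw update into a flat record using the field names of the gateway output shown further down. It assumes `sz` is the remaining size and `origSz` the original size (so the filled size is their difference), keeps the raw `side` flag as-is since the post does not show how direction is encoded internally, and leaves `triggered` unmapped.

```python
from decimal import Decimal

# hypothetical mapping for non-open Hyperliquid statuses
_TERMINAL = {
    "filled": "FILLED",
    "canceled": "CANCELLED",
    "marginCanceled": "CANCELLED",
    "rejected": "REJECTED",
}


def normalize_hyperliquid_update(msg):
    """Turn one raw Hyperliquid order update into an internal-style order record."""
    raw = msg["order"]
    orig_sz = Decimal(raw["origSz"])
    remaining = Decimal(raw["sz"])  # assumption: sz is the unfilled remainder
    filled = orig_sz - remaining
    if msg["status"] == "open":
        status = "PARTIAL" if filled > 0 else "NEW"
    else:
        status = _TERMINAL[msg["status"]]
    return {
        "ticker": raw["coin"],
        "exc": "hyperliquid",
        "oid": str(raw["oid"]),      # ids as strings, as in the gateway output
        "side": raw["side"],         # kept raw: 'B' bid/buy, 'A' ask/sell
        "price": Decimal(raw["limitPx"]),
        "amount": orig_sz,
        "filled_sz": filled,
        "ord_status": status,
        "timestamp": msg["statusTimestamp"],
    }


async def normalizing_printer(updates):
    # wrapper handlers receive a list of updates, as in the messages above
    for msg in updates:
        print(normalize_hyperliquid_update(msg))
```

`normalizing_printer` could be passed as the `handler` in place of `printer` to see the standardized records instead of the raw ones.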

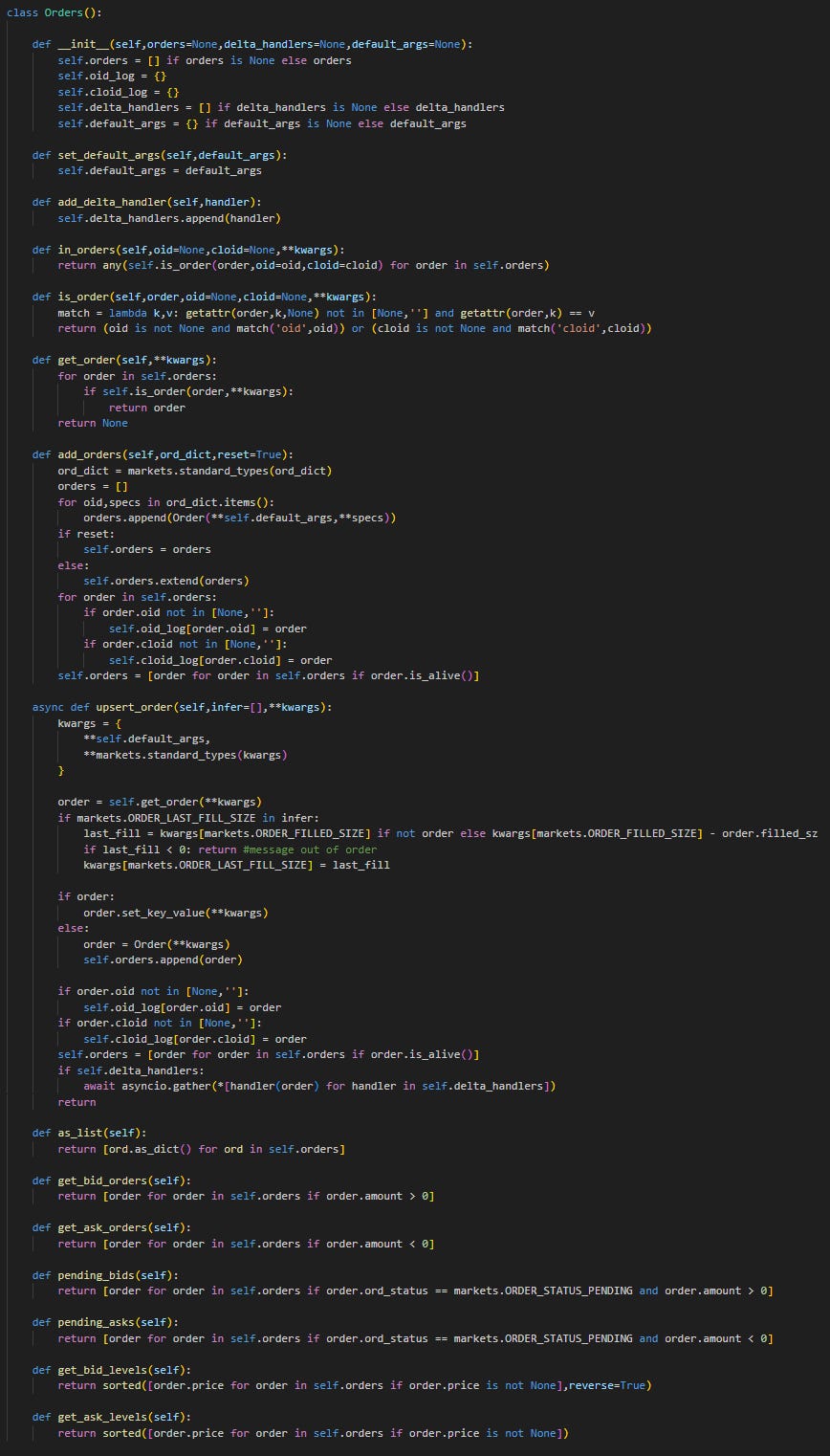

So let us create an internal Order object, and an Orders object (which is an order collection):

Now, our job at the normalization stage is to ensure that this Orders object is as accurate a representation of the exchange order state as possible. In the gateway and wrapper methods, though, this dependency is not exposed to the caller:

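```python
import asyncio
from pprint import pprint

async def printer(data):
    pprint(data)

async def main():
    from quantpylib.gateway.master import Gateway

    # config_keys: dict of exchange credentials, defined elsewhere
    gateway = Gateway(config_keys=config_keys)
    await gateway.init_clients()
    # mirror the Hyperliquid orders page; printer is called on every update
    await gateway.orders.orders_mirror(exc='hyperliquid', on_update=printer)

    order = {
        "ticker": "SOL",
        "amount": 1,
        "price": 100,
        "exc": "hyperliquid",
    }
    res = await gateway.executor.limit_order(**order)
    await asyncio.sleep(100)

if __name__ == "__main__":
    asyncio.run(main())
```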

```
>>
[{'amount': Decimal('1.0'),
  'cloid': '',
  'exc': 'hyperliquid',
  'filled_sz': Decimal('0.0'),
  'last_fill_sz': Decimal('0.0'),
  'oid': '37021116528',
  'ord_status': 'NEW',
  'ord_type': None,
  'price': Decimal('100.0'),
  'price_match': None,
  'reduce_only': None,
  'sl': None,
  'ticker': 'SOL',
  'tif': None,
  'timestamp': 1725622525326,
  'tp': None}]
```

Some things to notice:

i) The default behavior is to return a list of dictionaries of order info, not an Orders object. This hides the dependency from the caller of the hpl wrapper.

ii) orders_mirror uses an implicit order_updates_subscribe call to maintain an internal mirror of the orders page. The underlying subscription delivers the raw data schema, and the mirroring is a utility function written on top of that exchange-specific schema.

This is important because the wrapper is intended to be used as all of i) a standalone SDK, ii) a gateway client and iii) a feed + OMS. By keeping the API requests separate from the utility functions built on top of them, the code stays open for extension and closed for modification.
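A toy illustration of that layering: the stub below reuses the method names from the post purely for readability, but its logic is illustrative and is not quantpylib's implementation. The raw subscription forwards exchange messages untouched, and the mirror is a separate utility composed on top of it.

```python
import asyncio


class StubWrapper:
    """Stand-in wrapper replaying canned message batches (illustrative only)."""

    def __init__(self, raw_batches):
        self.raw_batches = raw_batches

    async def order_updates_subscribe(self, handler):
        # raw API layer: pass each exchange-specific batch through untouched
        for batch in self.raw_batches:
            await handler(batch)

    async def orders_mirror(self, on_update=None):
        # utility layer: built purely on top of the raw subscription
        mirror = {}

        async def apply(batch):
            for msg in batch:
                oid = str(msg["order"]["oid"])
                if msg["status"] == "open":
                    mirror[oid] = msg["order"]
                else:
                    mirror.pop(oid, None)  # filled/cancelled orders leave the page
            if on_update is not None:
                await on_update(list(mirror.values()))

        await self.order_updates_subscribe(handler=apply)
        return mirror
```

Adding another utility (say, a fills log) means adding another method that consumes the same subscription; the subscription itself never has to change.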

Now for the OMS…

While the gateway itself is already useful, it lacks a bit of flair, and a lot of code has to be written for state maintenance in a typical strategy. Additionally, the gateway represents our exchange states, but as we have pointed out, the trading agent itself has states of its own that matter to our strategies. On a different point, the general class of trading strategies is not exchange-specific and requires cross-exchange handling, which would be inappropriate to put at the gateway level.

So, for instance, we might want to submit a maker-taker order across Binance and Hyperliquid that submits the taker order when the maker order is (partially) filled. Putting this inside either the Binance or the Hyperliquid logic would obviously be insane.

So the OMS handles this `cross-border` logic in a way that keeps the trading agent’s needs in mind, while exploiting the flexibility of our gateway.
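To make the maker-taker example concrete, here is a hypothetical sketch of logic that lives in the OMS rather than in either wrapper. It assumes order deltas arrive as dicts in the internal schema, that `last_fill_sz` is signed (positive for buys, negative for sells), and that the executor exposes a `market_order` method; `StubExecutor` only records calls and `MakerTakerHedger` is an illustrative name.

```python
import asyncio
from decimal import Decimal


class StubExecutor:
    """Records orders instead of sending them (stand-in for a gateway executor)."""

    def __init__(self):
        self.sent = []

    async def market_order(self, ticker, amount, exc):  # hypothetical method
        self.sent.append({"ticker": ticker, "amount": amount, "exc": exc})


class MakerTakerHedger:
    """Hypothetical OMS-level logic: hedge maker fills on one venue with taker orders on another."""

    def __init__(self, executor, maker_exc, taker_exc):
        self.executor = executor
        self.maker_exc = maker_exc
        self.taker_exc = taker_exc

    async def on_order_delta(self, order):
        # only react to fills of the maker leg
        if order["exc"] != self.maker_exc:
            return
        fill = order["last_fill_sz"]  # assumption: signed, positive = bought
        if fill == 0:
            return
        # offset exactly what was just filled, on the other exchange
        await self.executor.market_order(
            ticker=order["ticker"], amount=-fill, exc=self.taker_exc
        )
```

Because the handler only sees normalized deltas, neither wrapper knows the other exists. A fuller version would also filter on the maker order's cloid, so that unrelated orders on the maker exchange are not hedged.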

It turns out that, as a reward for keeping internal order states, it is trivial for us to track order page changes (deltas): every time we insert or update (upsert) an order, we can broadcast it to a collection of registered handlers (inside the Orders class):

```python
async def upsert_order(self, infer=[], **kwargs):
    # fill in defaults, then coerce values to the gateway's standard types
    kwargs = {
        **self.default_args,
        **markets.standard_types(kwargs),
    }
    # find the existing record for this order, if any
    order = self.get_order(**kwargs)

    if markets.ORDER_LAST_FILL_SIZE in infer:
        # the exchange only reports cumulative fills: derive the latest fill from the difference
        last_fill = kwargs[markets.ORDER_FILLED_SIZE] if not order else kwargs[markets.ORDER_FILLED_SIZE] - order.filled_sz
        if last_fill < 0:
            return  # message out of order
        kwargs[markets.ORDER_LAST_FILL_SIZE] = last_fill

    if order:
        order.set_key_value(**kwargs)
    else:
        order = Order(**kwargs)
        self.orders.append(order)

    # index the order by exchange id and client id once they are known
    if order.oid not in [None, '']:
        self.oid_log[order.oid] = order
    if order.cloid not in [None, '']:
        self.cloid_log[order.cloid] = order

    # keep only live orders in the page
    self.orders = [o for o in self.orders if o.is_alive()]

    # broadcast the delta to every registered handler
    if self.delta_handlers:
        await asyncio.gather(*[handler(order) for handler in self.delta_handlers])

    return
```

This has the added benefit that our order delta broadcasts are now also standardized across exchanges, as long as each wrapper correctly maintains the Orders object.
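A stripped-down, self-contained model of the same mechanism may help (this is not the quantpylib `Orders` class, and `MiniOrders` is a hypothetical name): orders are keyed by oid only, fills arrive as cumulative sizes (as in Hyperliquid's order updates), the last fill is inferred as the increase over the stored value, stale out-of-order messages are dropped, and every accepted upsert is broadcast to the registered handlers.

```python
import asyncio
from decimal import Decimal


class MiniOrders:
    """Toy order page: upsert by oid, infer last fill, broadcast deltas."""

    def __init__(self):
        self.by_oid = {}
        self.delta_handlers = []

    async def upsert(self, oid, filled_sz, status):
        filled_sz = Decimal(str(filled_sz))
        prev = self.by_oid.get(oid)
        # exchange reports cumulative fills; the new fill is the increase
        last_fill = filled_sz - (prev["filled_sz"] if prev else Decimal("0"))
        if last_fill < 0:
            return  # stale message arrived out of order: ignore it
        order = {
            "oid": oid,
            "filled_sz": filled_sz,
            "last_fill_sz": last_fill,
            "ord_status": status,
        }
        self.by_oid[oid] = order
        # every handler receives its own copy of the same normalized delta
        await asyncio.gather(*(handler(dict(order)) for handler in self.delta_handlers))
```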

We can clearly see that the Binance mirror logic and its order socket updates contain more information than Hyperliquid’s:

However, since the delta updates are pushed based on internal state changes rather than on what the exchange sends us, the data formats we get are normalized.

Now the OMS can take a gateway executor:

When asked to submit a limit order, the OMS inserts a PENDING order into the orders page. Our order state life cycle under the OMS now looks like this:

PENDING > NEW > FILLED. When we receive an exchange ACK, we can simply use the client order ID (cloid) in our upsert_order call to update the pending record. You can see that the oid is only populated on the ACK update; the pending order only has a cloid. Additionally, because we keep local states, compared to the raw schema:

```
[{'order': {'coin': 'SOL', 'side': 'B', 'limitPx': '100.0', 'sz': '1.0', 'oid': 37009173521, 'timestamp': 1725612541970, 'origSz': '1.0'}, 'status': 'open', 'statusTimestamp': 1725612541970}]
```

we have additional information such as the last fill size, whereas the hpl API's order updates only report the accumulated fill size. The printed messages…

```
{'amount': Decimal('1'),
 'cloid': '0x5c33360ba06c67fb5d41508482efa391',
 'exc': 'hyperliquid',
 'filled_sz': Decimal('0'),
 'last_fill_sz': Decimal('0'),
 'oid': None,
 'ord_status': 'PENDING',
 'ord_type': None,
 'price': Decimal('130'),
 'price_match': None,
 'reduce_only': None,
 'sl': None,
 'ticker': 'SOL',
 'tif': None,
 'timestamp': 1725615717034,
 'tp': None}

{'amount': Decimal('1.0'),
 'cloid': '0x5c33360ba06c67fb5d41508482efa391',
 'exc': 'hyperliquid',
 'filled_sz': Decimal('0.0'),
 'last_fill_sz': Decimal('0.0'),
 'oid': '37012909160',
 'ord_status': 'NEW',
 'ord_type': None,
 'price': Decimal('130.0'),
 'price_match': None,
 'reduce_only': None,
 'sl': None,
 'ticker': 'SOL',
 'tif': None,
 'timestamp': 1725615716314,
 'tp': None}

{'amount': Decimal('1.0'),
 'cloid': '0x5c33360ba06c67fb5d41508482efa391',
 'exc': 'hyperliquid',
 'filled_sz': Decimal('1.0'),
 'last_fill_sz': Decimal('1.0'),
 'oid': '37012909160',
 'ord_status': 'FILLED',
 'ord_type': None,
 'price': Decimal('130.0'),
 'price_match': None,
 'reduce_only': None,
 'sl': None,
 'ticker': 'SOL',
 'tif': None,
 'timestamp': 1725615716314,
 'tp': None}
```

Okay, I think that is it for now. I am still writing the OMS code and it is not yet in the official documentation, but the use cases, walkthroughs and docs should be up soon. I just thought that this was a good lesson in coding principles for writing extensible systems.

Of course, I still have a lot to work on in the OMS; one item is error-correcting fallbacks during socket reconnections.

Hope you liked the article. I think it is a bit messy, but considering that it stretches across so many layers of the architecture, it was a bit of a challenge.

Quantpylib is intended for annual readers: