compile-time eval + lock-free equals du du du du

Du du du du, Max Verstappen~. combining compile time eval and the cache conscious ringbuffer to achieve sub100ns p99-logging latency

Sorry about that title, f1 cars are racing as I type this post where I live.

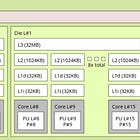

In the last notes, we talked about the micro-architecture and the cache conscious design of the ringbuffer.

We explored closure-based logging and its deficiencies for low-latency logging systems due to heap allocations on the hot path and unreliable optimizations by the compiler.

We will round up this post, and discuss how to adapt these ideas to achieve a performant logging setup. The full code, examples and simple benchmarking code has been uploaded to quantcplib. It’s a couple thousand lines.

We have already talked about optimizations at the level of the ringbuffer, and how lock-free inter-thread synchronization for message passing can be optimized via a multitude of techniques. We also previously discussed in our post what cost (invocation latency) is imperative in the logging problem. We will not further discuss these ideas, and instead talk about two new ideas.

Compile-Time Evaluation for Efficient Logging

One of the biggest costs in printf-style logging is format string parsing. At runtime, the logger has to scan the string, identify placeholders (like %d or %s) count parameters, and handle type conversions.

Consider a LOG statement:

LOG(INFO, “Protocol: %s, Host: %s, Port: %d”, protocol, host, conn_port);

that specifies when the application has established a network connection. This statement consists of the following:

LOG ‘function’ name

INFO log level

The FORMAT string, “Protocol… %d”

ARGUMENTS namely protocol, host and conn_port

METADATA such as filename.cpp[15] for line 15, timestamp

Let’s call the call site to be where the log is called in our source code, and the function invocation/invocation site to be each instance of a function call to LOG().

Here, we can recognize that there a number of static data that does not vary between function invocations, and are immutable w.r.t. call site. In particular, the log severity, number of arguments, filename, line number are all static data. On the other hand, the values carried by the argument, timestamp metadata are dynamic.

Compile time logic can help to move as little data as possible across the thread boundaries. Necessarily, all dynamic values have to be transferred at runtime. We want to minimize synchronization overhead of remaining static data.

Compile-time computation means the compiler evaluates expressions during build time, making runtime code leaner, as well as enabling the compiler to generate aggressive code optimizations.

We can do the some of the log-work at compile time using constexpr functions:

Count the number of parameters in the format string.

Infer the type of each parameter (string vs. non-string) to guide storage.

Generate a static data structure that bundles file/line info, severity, and even a function pointer for later unloading.

Static variables

The properties of static log metadata lends great use to static variables in cpp, which are powerful features that control the lifetime, scope, and initialization of data.

Unlike automatic (local) variables, which are created and destroyed with each function call or block, static variables persist for the duration of the program. They are initialized only once, which makes them ideal for caching constants, singletons, or per-thread resources in performance-sensitive code like our high-performance logger.

We are now a hop, skip and jump away from making a compile-time LOG ‘function’, note the quotes. Instead of LOG being a function, we define special macros such that the LOG statement expands into a code block with static variable initialization for static data.

constexpr functions and template metaprogramming gives us the ability to count the number and type of these parameters at zero cost. The static_data is only created once and cached in the code block. Template functions generate specialized code to compute argument sizes at runtime. Each LogEntry only needs to communicate (memcpy) 8 bytes pointer to data, as well as specialized memcpy wrappers. The data is put into pre-allocated, byte oriented ringbuffer in a claim-commit sequence at the call site.

This reduces the runtime logic to just minimal memcpy of runtime data (large string logging with no parameters are zero-cost!) and one strlen call per-string argument, plus one lock-free synchronization for message handoff (and for all purposes, wait-free for sane logging rates).

I will leave the details of the implementation for readers to check out on the quantcplib repo, since the template-metaprogramming part, is well, very non-trivial to put on text.

As for the byte-oriented buffer, we just have to specialize the typed-ringbuffer to contain raw bytes, and allow sequence claims over N slots. Since we know the type and order of parameters, we can easily compact the byte representation of arguments [data], [lenStr,strData…] onto byte sequences than can be processed at the log writer thread.

For the logic of the writer thread, per-thread spsc buffer merge, handling wraparounds…I’ll leave that to you to explore in the repo.

That concludes our logger series.

Happy Weekend all :)